The intersection of Tech & Cinema

Last weekend I lost my mind and took part in a 48-hour film festival, where you write, shoot and screen a film within a single weekend.

I used the opportunity to test a concept that will soon be the future of cinema production: using big data to produce cinema.

Big data is a buzzword that basically means you over-collect a sh*t ton of data and then use a supercomputer to make it useful. We use this technology every day through apps that draw on big data over the web to give directions, recommend a restaurant, and so on.

In cinema, big data is primarily used by video distribution companies like Amazon, Netflix and Hulu to recommend movies based on geography and past activity. But this is all changing.

Companies like Lytro are making light field cameras that let directors change focus, lighting and composition in post-production. They do this by recording not just the intensity of light (as traditional cameras do) but also the direction of the light rays reaching the sensor. In a VR environment this data can be rendered in real time to provide six degrees of freedom (6DoF): in other words, the illusion that you can physically move through the scene rather than just look around it. Unfortunately I couldn't get my hands on a light field camera, so I used a 360 camera for the following experiment.
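To make the refocusing claim concrete, here is a minimal sketch of shift-and-add synthetic refocusing, a standard light field technique (not necessarily what Lytro's own software does). It assumes the light field has already been decoded into a grid of grayscale sub-aperture views, each seen from a slightly offset viewpoint, stored as a NumPy array of shape (U, V, H, W). The `refocus` function and its `shift` parameter are hypothetical names for illustration.

```python
import numpy as np

def refocus(light_field, shift):
    """Shift-and-add refocusing (illustrative sketch).

    light_field: array of shape (U, V, H, W), a grid of sub-aperture views
                 (assumed to be already decoded from the raw sensor data).
    shift: pixels of displacement per unit of view offset; changing it
           moves the synthetic focal plane.
    """
    n_u, n_v = light_field.shape[:2]
    cu, cv = (n_u - 1) / 2, (n_v - 1) / 2  # index of the central view
    out = np.zeros(light_field.shape[2:], dtype=float)
    for u in range(n_u):
        for v in range(n_v):
            # Displace each view in proportion to its offset from the centre
            dy = int(round(shift * (u - cu)))
            dx = int(round(shift * (v - cv)))
            # np.roll wraps pixels around the border; acceptable for a sketch
            out += np.roll(light_field[u, v], (dy, dx), axis=(0, 1))
    return out / (n_u * n_v)  # average of all aligned views
```

Because each view sits at a slightly different position, an object at a given depth appears displaced in proportion to that view's offset from the centre. Shifting every view to cancel that displacement and averaging brings that depth into focus while blurring everything else, so changing `shift` moves the focal plane after the fact.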

The top 3 reasons to shoot a traditional film with a 360 camera

- To speed up production: No time wasted setting up multiple shots to get a different look. Because you're composing your shots in post, you can focus on performances and design the shots later.

- To reduce cost: Eliminating relights and camera moves on set removes much of the overhead that drives up production costs. Sure, you'll have to do that work in post, but it takes far fewer people.

- Creative freedom: Composing shots in post allows camera moves that would otherwise be impossible. An added bonus is the ability to make a VR version of your film.

A case study: “Another Again”

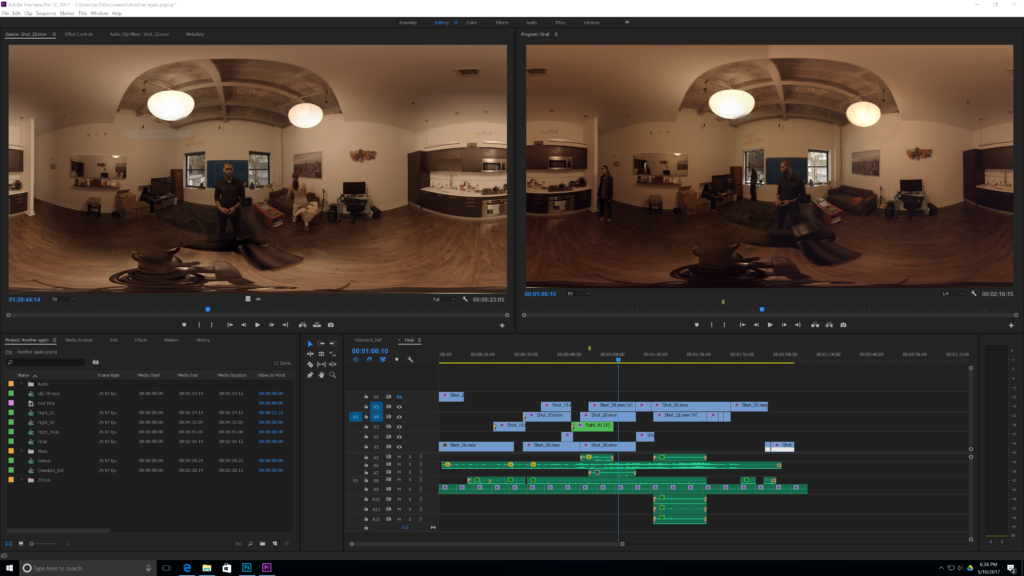

This film was shot with a 360 camera placed in the center of the room and without a cinematographer. All cinematography and camera moves were animated in post using Adobe Premiere and the GoPro Reframe plugin.

Above is the spherical edit in Adobe Premiere. Different takes were composited in layers, which provided the basic special effects.

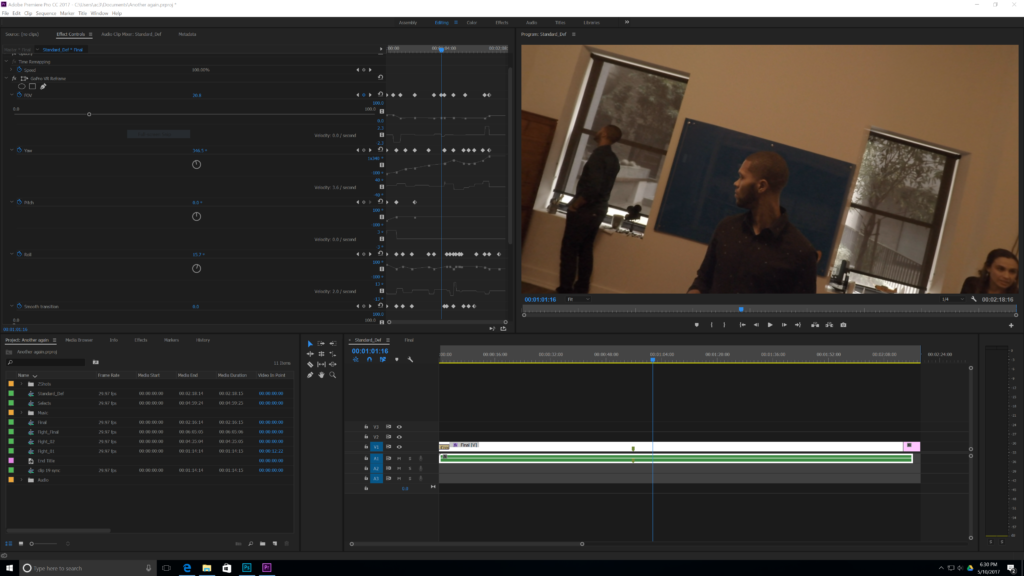

Next I applied the GoPro Reframe plugin to a nested sequence and animated the yaw, roll, pitch and field of view to produce camera moves around the spherical video. The keyframes for these parameters are in the upper left.
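For readers curious what reframing does under the hood, here is a rough sketch. It is not the GoPro Reframe plugin's actual implementation; it is a generic equirectangular-to-rectilinear projection under assumed conventions: positive yaw turns right, positive pitch tilts up, roll spins around the viewing axis, and `fov` is the horizontal field of view in degrees. The function names `rotation`, `reframe` and `param_at` are hypothetical, and `param_at` only does plain linear interpolation between keyframes, whereas Premiere can also ease between them.

```python
import numpy as np

def rotation(yaw_deg, pitch_deg, roll_deg):
    """Camera-to-world rotation (assumed axes: x right, y down, z forward)."""
    a, b, c = np.radians([yaw_deg, pitch_deg, roll_deg])
    ry = np.array([[np.cos(a), 0, np.sin(a)],   # yaw: turn around the vertical axis
                   [0, 1, 0],
                   [-np.sin(a), 0, np.cos(a)]])
    rx = np.array([[1, 0, 0],                   # pitch: tilt around the x axis
                   [0, np.cos(b), -np.sin(b)],
                   [0, np.sin(b), np.cos(b)]])
    rz = np.array([[np.cos(c), -np.sin(c), 0],  # roll: spin around the viewing axis
                   [np.sin(c), np.cos(c), 0],
                   [0, 0, 1]])
    return ry @ rx @ rz

def reframe(equirect, yaw, pitch, roll, fov, out_w=640, out_h=360):
    """Render a flat (rectilinear) view from one equirectangular 360 frame."""
    h, w = equirect.shape[:2]
    f = (out_w / 2) / np.tan(np.radians(fov) / 2)  # focal length in pixels
    u, v = np.meshgrid(np.arange(out_w) + 0.5, np.arange(out_h) + 0.5)
    # One viewing ray per output pixel, in camera coordinates
    dirs = np.stack([(u - out_w / 2) / f, (v - out_h / 2) / f, np.ones_like(u)], axis=-1)
    dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)
    world = dirs @ rotation(yaw, pitch, roll).T           # aim rays where the virtual camera looks
    lon = np.arctan2(world[..., 0], world[..., 2])        # -pi..pi, 0 = panorama centre
    lat = -np.arcsin(np.clip(world[..., 1], -1.0, 1.0))   # +pi/2 = straight up
    # Nearest-neighbour lookup: longitude wraps around, latitude is clamped
    col = ((lon / (2 * np.pi) + 0.5) * w).astype(int) % w
    row = np.clip(((0.5 - lat / np.pi) * h).astype(int), 0, h - 1)
    return equirect[row, col]

def param_at(keyframes, t):
    """Linearly interpolate one keyframed parameter, e.g. [(0.0, 0.0), (2.0, 90.0)]."""
    times, values = zip(*keyframes)
    # No easing and no 359 -> 0 degree wrap-around handling
    return float(np.interp(t, times, values))
```

As a usage example, calling `reframe(frame, param_at(yaw_keys, t), 0, 0, 90)` for each frame time `t` (with `frame` and `yaw_keys` standing in for your own footage and keyframes) would turn a locked-off 360 shot into a pan across the room.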

And this is just the beginning!

Part of what makes this exciting is that we're just scratching the surface. This is a basic demonstration of the workflow of tomorrow, where big data allows for a creative explosion in cinema and VR. Light field VR cameras will capture the intensity and direction of the light throughout the scene, enabling nearly limitless creative decisions in post. The data will be sent to the cloud, where supercomputers will crunch it and send back only the information needed to render the scene to your liking. Now if only we could get storytelling to innovate as well 🙂
